OPQ Makai

OPQ Makai is a set of distributed services which together are responsible for aggregating and processing the measurements generated by the OPQ Boxes. OPQ Makai is made up of three software components, each responsible for a specific task in the OPQ ecosystem. These components are:

- Triggering Broker: receives low fidelity PQ data (frequency, voltage, THD) from OPQ Boxes.

- Acquisition Broker: requests and receives high fidelity PQ data (waveforms) from OPQ Boxes.

- Triggering Service: performs analyses in order to decide whether or not to request high fidelity data from one or more OPQ Boxes.

Makai uses 0MQ for transport and protobuf for serialization. This allows easy integration with heterogeneous and polylingual systems, such as OPQ Mauka and OPQ View. The block diagram of Makai components is shown below:

Triggering Broker

The Triggering Broker is the endpoint for the low fidelity data (frequency, RMS voltage, and THD measurements) that OPQ Boxes generate several times per second. It also serves as the endpoint through which the rest of the OPQ infrastructure receives the OPQ Box triggering data stream. After receiving low fidelity OPQ Box data, it forwards the data to the Triggering Service for further analysis.

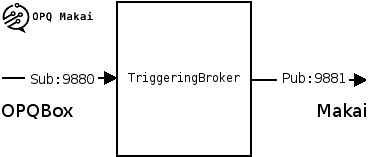

Every OPQ Box that connects to the Triggering Broker must be authenticated using the 0MQ security suite (CurveZMQ). To make this possible, the public key of every OPQ Box must be provided to the Triggering Broker at startup. Likewise, the Triggering Broker's public key is distributed to every OPQ Box so that the boxes can authenticate the broker. The Triggering Broker uses a SUB socket for receiving data from the boxes and a PUB socket for forwarding data further down the line. A block diagram of the triggering broker is shown below:

Installation

The Triggering Broker requires the following C/C++ libraries:

- ZeroMQ >= v4.2: Installation instructions

- zmqpp >= v4.2 : Installation instructions

Furthermore, the Triggering Broker requires gcc >= v6.3, as well as a recent version of cmake.

If you would like to build the Triggering Broker without using build_and_install.sh, then follow these steps:

- cd TriggeringBroker/: switch to the triggering broker directory.

- mkdir -p build: create a directory where the build will take place.

- cd build: switch to the build directory.

- cmake ..: run cmake pointing to the source of the triggering broker.

- make: build the binary.

This will generate the TriggeringBroker binary. Follow the directions in the Software Services section for configuration.

Configuration

By default the Triggering Broker will look for its configuration file in /etc/opq/triggering_broker.json. A default configuration file is shown below:

    {
        "client_certs": "/etc/opq/curve/public_keys",
        "server_cert": "/etc/opq/curve/private_keys/opqserver_secret",
        "interface": "tcp://*:9880",
        "backend": "tcp://*:9881"
    }

Fields are as follows:

- client_certs : directory containing public keys of the OPQ Boxes.

- server_cert : private key of the Triggering Broker.

- interface : endpoint for the OPQ Box.

- backend : endpoint for the cloud infrastructure.
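As a rough illustration of how these fields fit together, the following sketch loads a configuration file and checks that the four documented keys are present. The function names and the `tcp://` check are assumptions made for this example; they are not part of the Triggering Broker itself.

```python
import json

# Keys taken from the default configuration file shown above.
REQUIRED_KEYS = ("client_certs", "server_cert", "interface", "backend")


def validate_triggering_broker_config(config):
    """Check that a parsed configuration contains the documented keys."""
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise KeyError("missing configuration keys: " + ", ".join(missing))
    for key in ("interface", "backend"):
        # Both endpoints in the default file are TCP. This check is an
        # assumption of the sketch, not a rule enforced by the broker.
        if not config[key].startswith("tcp://"):
            raise ValueError(f"{key} should be a tcp:// endpoint, got {config[key]!r}")
    return config


def load_triggering_broker_config(path="/etc/opq/triggering_broker.json"):
    """Read the JSON file at the default location and validate it."""
    with open(path, encoding="utf-8") as handle:
        return validate_triggering_broker_config(json.load(handle))
```

Running a check like this before starting the broker can catch a misspelled key early, for example `client_cert` instead of `client_certs`.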

Interface

In order to communicate with the Triggering Broker, connect to the backend port using a ZMQ SUB socket in a language of your choice and subscribe to the OPQ Box IDs you would like to receive data from. Alternatively, an empty subscription will receive data from all devices. Each message contains two frames. The first frame is the Box ID encoded as a string. The second frame contains a protobuf encoded TriggerMessage described in the Protocol section.
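A minimal subscriber sketch in Python, using the pyzmq bindings, might look like the following. It assumes the broker is reachable at `tcp://localhost:9881` (the default backend port above) and that the backend socket is not encrypted. The helper names are hypothetical. The protobuf payload is returned undecoded, since decoding requires the classes generated from the OPQ protocol definition.

```python
def split_trigger_frames(frames):
    """Split a two-frame message into (box_id, protobuf_payload)."""
    if len(frames) != 2:
        raise ValueError(f"expected 2 frames, got {len(frames)}")
    box_id = frames[0].decode("utf-8")
    return box_id, frames[1]


def listen(endpoint="tcp://localhost:9881", box_ids=()):
    """Yield (box_id, payload) pairs from the Triggering Broker backend."""
    import zmq  # pyzmq, imported lazily so the helper above has no dependency

    socket = zmq.Context.instance().socket(zmq.SUB)
    socket.connect(endpoint)
    # An empty prefix subscribes to every box; otherwise one prefix per ID.
    for prefix in (box_ids or ("",)):
        socket.setsockopt(zmq.SUBSCRIBE, str(prefix).encode("utf-8"))
    while True:
        yield split_trigger_frames(socket.recv_multipart())
```

Note that ZMQ subscriptions match on message prefixes, so subscribing to the ID `1` would also match messages from a box with the ID `10`. If that matters for your deployment, filter on the decoded Box ID after receiving.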

Acquisition Broker

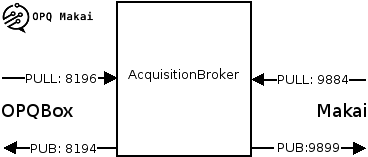

The Acquisition Broker is a microservice responsible for high fidelity (raw waveform) data acquisition from the OPQ Boxes. As with the Triggering Broker, all communication between an OPQ Box and the Acquisition Broker is authenticated and encrypted using CurveZMQ. A diagram of the Acquisition Broker interfaces is shown below:

OPQ Boxes connect to the Acquisition Broker's PUB interface and wait for raw data requests. Once a raw data request arrives, the box sends the requested data back to the Acquisition Broker via the PULL interface. Both the raw waveform data and the event request are logged in MongoDB. For the MongoDB schema data model, see the Data Model section.

Installation

The Acquisition Broker requires the following C/C++ libraries:

- ZeroMQ >= v4.2: Installation instructions

- zmqpp >= v4.2 : Installation instructions

- protobuf : Installation instructions

- mongocxx : Installation instructions

Furthermore, the Acquisition Broker requires gcc >= v6.3, as well as a recent version of cmake.

If you would like to build the Acquisition Broker without using build_and_install.sh, then follow these steps:

- cd AcquisitionBroker/: switch to the acquisition broker directory.

- mkdir -p build: create a directory where the build will take place.

- cd build: switch to the build directory.

- cmake ..: run cmake pointing to the source of the acquisition broker.

- make: build the binary.

This will generate the AcquisitionBroker binary. Follow the directions in the Software Services section for configuration.

Configuration

By default, the Acquisition Broker will attempt to load a configuration file located in /etc/opq/acquisition_broker_config.json. Alternatively, a configuration file can be passed as a command line argument.

    {
        "client_certs": "/etc/opq/curve/public_keys",
        "server_cert": "/etc/opq/curve/private_keys/opqserver_secret",
        "box_pub": "tcp://*:8194",
        "box_pull": "tcp://*:8196",
        "backend_pull": "tcp://*:9884",
        "backend_pub": "tcp://*:9899",
        "mongo_uri": "mongodb://localhost:27017"
    }

The fields are as follows:

- client_certs : path to a directory containing OPQ Box public keys.

- server_cert : path to the server private key.

- box_pub : a PUB interface for the OPQ Box.

- box_pull : a PULL interface for the OPQ Box.

- backend_pull : an OPQ Hub PULL endpoint.

- backend_pub : an OPQ Hub PUB endpoint.

- mongo_uri : MongoDB host and port.

Interface

In order to initiate an event acquisition, a client connects to the Acquisition Broker's PULL interface and sends it a protobuf encoded RequestDataMessage. Furthermore, any client connected to the PUB interface will be notified of a new event by receiving a text encoded event number.
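The exchange described above could be sketched in Python with pyzmq as follows. This is an illustration under several assumptions: the endpoints are the default `backend_pull` and `backend_pub` ports from the configuration above, the backend sockets are unencrypted, the notification arrives as a single frame, and the caller supplies an already serialized protobuf request. The function names are hypothetical.

```python
def parse_event_notification(frame):
    """Convert a text-encoded event number such as b"1234" into an int."""
    return int(frame.decode("ascii").strip())


def request_event(serialized_request,
                  pull_endpoint="tcp://localhost:9884",
                  pub_endpoint="tcp://localhost:9899",
                  timeout_ms=5000):
    """Send one serialized request and wait for the next event number.

    Returns the event number, or None if nothing arrives within timeout_ms.
    """
    import zmq  # pyzmq, imported lazily so the parser above stays dependency-free

    context = zmq.Context.instance()
    notifications = context.socket(zmq.SUB)
    requests = context.socket(zmq.PUSH)
    try:
        # Subscribe before sending so the notification is less likely to be missed.
        notifications.connect(pub_endpoint)
        notifications.setsockopt(zmq.SUBSCRIBE, b"")
        requests.connect(pull_endpoint)
        requests.send(serialized_request)
        if notifications.poll(timeout_ms):
            return parse_event_notification(notifications.recv())
        return None
    finally:
        notifications.close()
        # Give the queued request up to one second to leave the socket.
        requests.close(linger=1000)
```

Because the PUB interface notifies every connected client, the number returned here is simply the next event announced and may belong to a request made by another client.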

Triggering Service

The Triggering Service is responsible for analyzing the triggering streams from the OPQ Boxes and requesting raw waveforms whenever an anomaly is detected. Furthermore, the Triggering Service maintains the measurements and trends collections. Measurements are a copy of the measurement stream, kept in the database for only 24 hours, while trends are persistent averages of the measurement stream over a 1-minute window.
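To make the relationship between measurements and trends concrete, here is a small Python sketch of windowed averaging and expiry. It is a simplified model, not the service's implementation: samples are assumed to be `(timestamp_seconds, value)` pairs, and the constants mirror the 1-minute window and 24-hour retention described above.

```python
from collections import defaultdict

WINDOW_SECONDS = 60         # 1-minute trend window
EXPIRATION_SECONDS = 86400  # 24-hour measurement retention


def average_by_window(measurements, window=WINDOW_SECONDS):
    """Average (timestamp_s, value) samples into fixed windows.

    Returns a dict mapping each window start time to the mean value.
    """
    buckets = defaultdict(list)
    for timestamp, value in measurements:
        buckets[int(timestamp // window) * window].append(value)
    return {start: sum(values) / len(values)
            for start, values in sorted(buckets.items())}


def drop_expired(measurements, now, max_age=EXPIRATION_SECONDS):
    """Keep only the samples that are newer than max_age seconds."""
    return [(t, v) for t, v in measurements if now - t < max_age]
```

For example, two frequency samples of 59.5 Hz and 60.5 Hz taken within the same minute collapse into a single trend value of 60.0 Hz for that minute.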

The Triggering Service does not have any inherent logic for triggering stream analysis. Instead, it relies on analysis plugins loaded from dynamic libraries to analyze the incoming measurements and locate anomalies. This allows multiple analyses to be run on the same measurement stream. To accomplish this, the Triggering Service consists of three parts:

- makai daemon : the daemon responsible for measurement acquisition, plugin management, and communication with the Acquisition Broker.

- opqapi shared library : a shared library that defines the plugin interface and communication data structures.

- plugins : a set of dynamic libraries which implement the plugin interface and are responsible for the triggering stream analysis.
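The division of labor between the daemon and its plugins can be modeled in a few lines of Python. This is only a conceptual sketch: the real plugins are dynamic libraries implementing the Rust plugin interface, and every class, method, and field name below is hypothetical.

```python
class ThresholdPlugin:
    """Hypothetical analysis: flag frequency samples outside a tolerance band."""

    def __init__(self, nominal_hz=60.0, tolerance_hz=0.1):
        self.nominal_hz = nominal_hz
        self.tolerance_hz = tolerance_hz

    def process_measurement(self, box_id, frequency_hz):
        """Return a request dict when the sample looks anomalous, else None."""
        if abs(frequency_hz - self.nominal_hz) > self.tolerance_hz:
            return {"box_id": box_id, "reason": "frequency"}
        return None


def dispatch(plugins, box_id, frequency_hz):
    """Feed one measurement to every plugin and collect the resulting requests."""
    requests = []
    for plugin in plugins:
        request = plugin.process_measurement(box_id, frequency_hz)
        if request is not None:
            requests.append(request)
    return requests
```

In this model the daemon owns the measurement stream and the list of plugins, while each plugin only decides whether a given measurement warrants a raw data request.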

A block diagram of the makai daemon is shown below:

Installation

The Triggering Service is written in Rust, and as such most of the dependency management is handled by cargo. However, there are a few cases where native Rust code does not exist for certain libraries. In those cases, we use a shim package which wraps a C library. The list of C libraries is shown below:

- protobuf : Installation instructions

- ZeroMQ >= v4.2: Installation instructions

The Triggering Service also requires a stable branch of the Rust compiler.

To build the Triggering Service, cd into the TriggeringService directory and run the build.sh script. This will generate the makai binary as well as all the plugins in the build directory.

Configuration

The Makai daemon will look for its configuration file in /etc/opq/makai.conf, unless a different path is passed in as a command line argument. A typical configuration for the Triggering Service is shown below:

    {
        "zmq_trigger_endpoint": "tcp://127.0.0.1:9881",
        "zmq_acquisition_endpoint": "tcp://127.0.0.1:9881",
        "mongo_host": "localhost",
        "mongo_port": 27017,
        "mongo_measurement_expiration_seconds": 86400,
        "mongo_trends_update_interval_seconds": 60,
        "event_request_expiration_window_ms": 1800000,
        "plugins": [
            {
                "path": "/usr/local/lib/opq/plugins/libketos_plugin.so",
                "script_path": "./main.ket"
            }
        ]
    }

The list of fields is shown below:

- zmq_trigger_endpoint : Triggering Broker endpoint.

- zmq_acquisition_endpoint : Acquisition Broker endpoint.

- mongo_host : MongoDB host.

- mongo_port : MongoDB port.

- mongo_measurement_expiration_seconds : how long the measurements persist in MongoDB.

- mongo_trends_update_interval_seconds : the window width for trend averaging.

- event_request_expiration_window_ms : internal; do not change.

- plugins : a list of plugin objects, each with its own plugin-specific settings. The path field is required.
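The units in this file are easy to misread, so the following Python sketch converts the timing fields into more familiar units and checks the one requirement stated above, namely that every plugin entry has a `path`. The function name and the returned keys are made up for this example.

```python
import json

EXAMPLE = """
{
    "mongo_measurement_expiration_seconds": 86400,
    "mongo_trends_update_interval_seconds": 60,
    "event_request_expiration_window_ms": 1800000,
    "plugins": [
        {"path": "/usr/local/lib/opq/plugins/libketos_plugin.so",
         "script_path": "./main.ket"}
    ]
}
"""


def check_makai_config(config):
    """Validate the plugin list and return the timing fields in readable units."""
    for index, plugin in enumerate(config.get("plugins", [])):
        if "path" not in plugin:
            raise ValueError(f"plugin #{index} is missing the required 'path' field")
    return {
        "measurement_retention_hours": config["mongo_measurement_expiration_seconds"] / 3600,
        "trend_window_minutes": config["mongo_trends_update_interval_seconds"] / 60,
        "event_request_window_minutes": config["event_request_expiration_window_ms"] / 60000,
    }


summary = check_makai_config(json.loads(EXAMPLE))
```

With the values from the typical configuration, this corresponds to 24 hours of measurement retention, a 1-minute trend window, and a 30-minute event request window.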

Developing new plugins

Rust

The Triggering Service is implemented in the Rust programming language. Thus, Rust is the most straightforward way to develop Triggering Service plugins. A simple example of a plugin written in Rust is found here.

The only requirements for a plugin are a struct that implements the MakaiPlugin trait and a call to the declare_plugin! macro in the library, which brings this struct across the Rust FFI boundary into the daemon type system. Note that the data types TriggerMessage and RequestEventMessage are auto-generated by protobuf, and their description can be found in the Protocol section.

C/C++

Since Rust FFI is designed to interoperate with C/C++, it is quite easy to develop analysis plugins in C/C++. The only requirement is a Rust shim which implements the correct traits and defines the translation between the Rust and C/C++ types.

Other languages

For a natively compiled language, as long as the language has a C/C++ binding, that binding can interoperate with Rust. Again, the only requirement is a Rust shim which translates the Rust types into C/C++ types and finally into the types of the language of your choice. Many interpreted languages, such as Python and JavaScript, have Rust bindings for their virtual machines. An example of embedding a VM into a Triggering Service plugin is shown here. This plugin embeds a Lisp VM into a Rust plugin to provide type translation and runtime Lisp-based analysis and triggering. A similar plugin can be developed for any interpreted language with Rust bindings.

Docker

Please see Building, Publishing, and Deploying OPQ Cloud Services with Docker for information on packaging up this service using Docker.